Basic data munging operations: structured data

Spark & RDD basics: Apache Spark is an open-source, Hadoop-compatible, fast and expressive cluster computing platform. Its core concept is the RDD (Resilient Distributed Dataset), an immutable distributed collection of data that is partitioned across the machines in a cluster. RDDs support two types of operations: transformations, which define a new RDD from an existing one, and actions, which return a result to the driver program. Transformations are only computed when an action requires a result to be returned to the driver program. With these two types of RDD operations, Spark can run more efficiently: a dataset created through a map operation can be used in a subsequent reduce operation, and only the result of the last reduce function is returned to the driver, rather than the larger mapped dataset.
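The lazy behaviour described above can be illustrated without Spark. The following is a plain-Python analogy only: a generator stands in for a transformation and `sum` stands in for an action. The names `calls` and `traced_square` are hypothetical, and nothing here uses the Spark API.

```python
# Plain-Python analogy for lazy transformations; this is not Spark code.
calls = []  # records every time the mapped function actually runs


def traced_square(x):
    """Square x and record the call so we can see when work happens."""
    calls.append(x)
    return x * x


data = [1, 2, 3, 4]

# "Transformation": building the generator does not call traced_square yet.
mapped = (traced_square(x) for x in data)
calls_before_action = len(calls)

# "Action": consuming the generator triggers the computation and
# hands back only the reduced result, not the mapped values.
total = sum(mapped)
calls_after_action = len(calls)
```

In this sketch no squaring happens until `sum` consumes the generator, which loosely mirrors how Spark defers a map until an action such as reduce asks for a result.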

This page is under development.

| Operation | Python pandas | PySpark RDD | PySpark DF | R dplyr | Revo R dplyrXdf |
|---|---|---|---|---|---|
| subset columns | df.colname, df['colname'] | rdd.map() | df.select('col1', 'col2', ..) | select(df, col1, col2, ..) | |
| new columns | df['newcolumn']=.. | rdd.map(function) | df.withColumn("newcol", content) | mutate(df, col1=col2+col3, col4=col5^2,..) | |
| subset rows | df[1:10], df.loc['rowname':] | rdd.filter(function or boolean vector), rdd.subtract() | | filter | |
| sample rows | | rdd.sample() | | | |
| order rows | df.sort('col1') | | | arrange | |
| group & aggregate | df.sum(axis=0), df.groupby(['A', 'B']).agg([np.mean, np.std]) | rdd.count(), rdd.countByValue(), rdd.reduce(), rdd.reduceByKey(), rdd.aggregate() | df.groupBy('col1', 'col2').count().show() | group_by(df, var1, var2,..) %>% summarise(col=func(var3), col2=func(var4), ..) | rxSummary(formula, df) or group_by() %>% summarise() |
| peek at data | df.head() | rdd.take(5) | df.show(5) | first(), last() | |
| quick statistics | df.describe() | | df.describe() | summary() | rxGetVarInfo() |
| schema or structure | | | df.printSchema() | | |

..and there's always SQL

Syntax examples

Python pandas

PySpark RDDs & DataFrames

RDDs

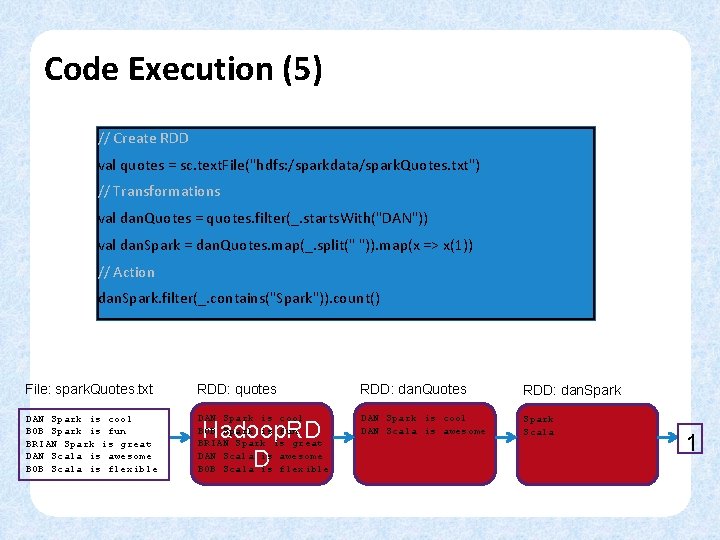

Transformations return pointers to new RDDs

- map, flatMap: flexible

- reduceByKey

- filter
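To make the semantics of map, reduceByKey and filter concrete, here is a single-machine emulation in plain Python. It is a sketch only: `reduce_by_key` is a hypothetical helper written for this page, not part of PySpark, and the real `rdd.reduceByKey()` runs across partitions and expects a function that is associative and commutative.

```python
from collections import defaultdict
from functools import reduce


def reduce_by_key(pairs, func):
    """Combine the values of each key with func (local stand-in for reduceByKey)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return {key: reduce(func, values) for key, values in grouped.items()}


words = "to be or not to be".split()

# map: turn every word into a (word, 1) pair
pairs = list(map(lambda w: (w, 1), words))

# reduceByKey: add up the 1s for each distinct word
counts = reduce_by_key(pairs, lambda a, b: a + b)

# filter: keep only the words that occur more than once
frequent = dict(filter(lambda kv: kv[1] > 1, counts.items()))
```

With a real RDD the same word count would be a chain of transformations, and nothing would run until an action such as collect is called.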

Actions return values

- collect

- reduce: for cumulative aggregation

- take, count

A reminder: how lambda functions, map, reduce and filter work
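As a brief refresher, the sketch below uses only built-in Python (no Spark). The variable names are illustrative; the point is that the same function-passing style carries over to rdd.map(), rdd.filter() and rdd.reduce().

```python
from functools import reduce  # in Python 3, reduce lives in functools

numbers = [1, 2, 3, 4, 5]

# A lambda is an anonymous one-expression function.
square = lambda x: x * x

# map applies a function to every element.
squares = list(map(square, numbers))

# filter keeps the elements for which the function returns True.
evens = list(filter(lambda x: x % 2 == 0, numbers))

# reduce folds the sequence into one value, two elements at a time.
total = reduce(lambda acc, x: acc + x, numbers)
```

In Python 3, map and filter return lazy iterators, which is why they are wrapped in list() here.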

Partitions: rdd.getNumPartitions(), sc.parallelize(data, 500), sc.textFile('file.csv', 500), rdd.repartition(500)

Additional functions for DataFrames

If you want to use an RDD method on a DataFrame, you can often call it through the underlying RDD: df.rdd.function().

Miscellaneous examples of chained data munging:

Further resources

- My IPyNB scrapbook of Spark notes

- Spark programming guide (latest)

- Spark programming guide (1.3)

- Introduction to Spark illustrates how Python functions like map & reduce work and how they translate into Spark, plus many data munging examples in pandas and then Spark

R dplyr

The 5 verbs:

- select = subset columns

- mutate = new cols

- filter = subset rows

- arrange = reorder rows

- summarise

Additional functions in dplyr

- first(x) - The first element of vector x.

- last(x) - The last element of vector x.

- nth(x, n) - The nth element of vector x.

- n() - The number of rows in the data.frame or group of observations that summarise() describes.

- n_distinct(x) - The number of unique values in vector x.

Revo R dplyrXdf

Notes:

- xdf = 'external dataframe' or distributed one in, say, a Teradata database

- If necessary, transformations can be done using rxDataStep(transforms=list(..))

Manipulation with dplyrXdf can use:

- filter, select, distinct, transmute, mutate, arrange, rename,

- group_by, summarise, do

- left_join, right_join, full_join, inner_join

- the following functions are supported by rx: sum, n, mean, sd, var, min, max

Further resources
